I’ve recently decided to develop an iOS application to learn more about AR development in Unity. This blog will be used to take notes and document design decisions I must make. Let’s get started.

I began my journey on Ray Wenderlich’s website. Ken Lee’s AR Foundation tutorial there was a great way to find the necessary resources and to understand Unity’s approach of wrapping the platform AR SDKs behind a single API, AR Foundation.

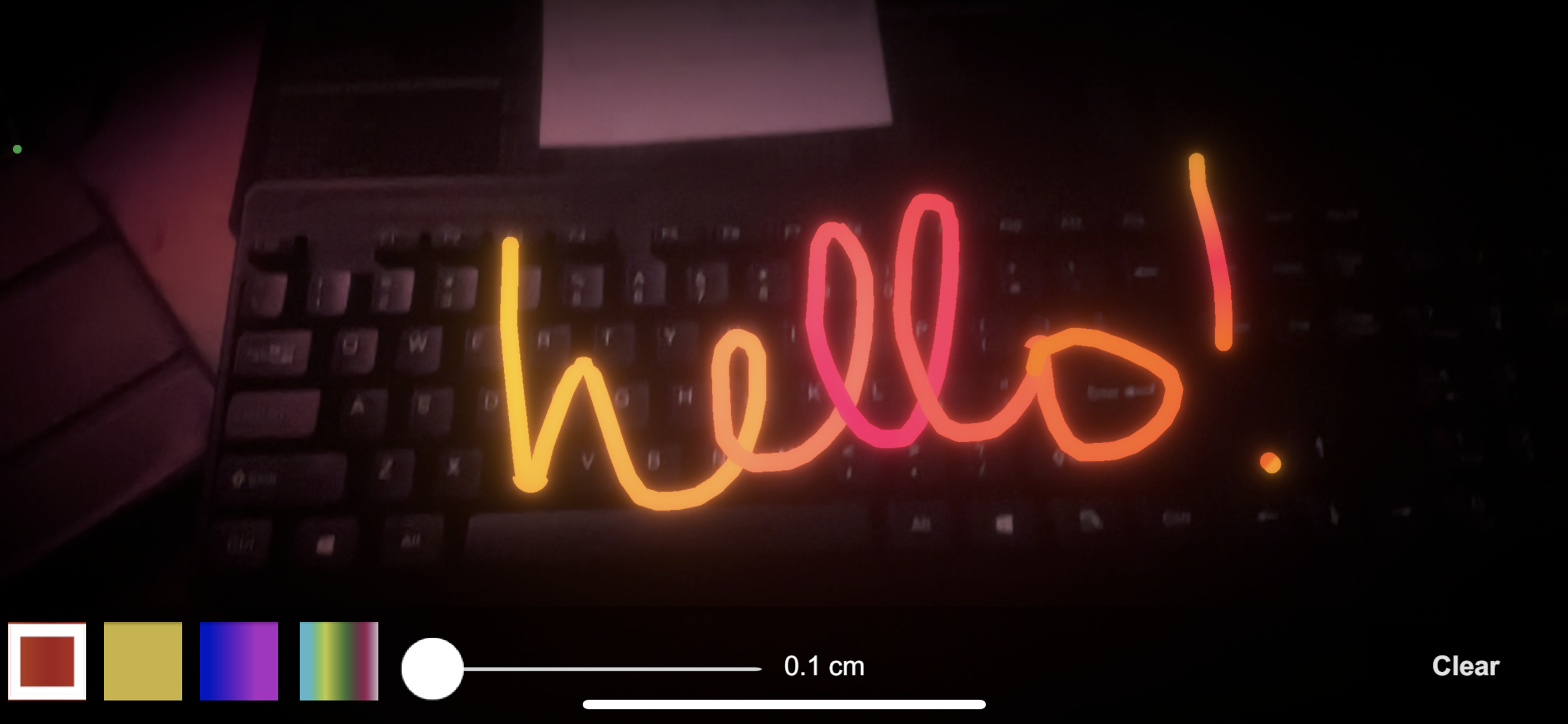

After completing the tutorial, I have the following AR features running on my iPhone: an AR Plane Manager that detects surfaces through the device’s camera, AR raycast logic that maps the player’s touch input onto those surfaces, and simple “Doodle” logic so the player can draw lines of different colors and thicknesses on the detected AR surfaces.

The app’s Doodle logic will not start until the camera detects an AR surface.
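To make the gating concrete, here is a minimal sketch of how touch input could be raycast onto detected planes, and ignored until a plane exists. This is not the tutorial’s code: the class name `DoodleTouchRaycaster` and the `OnDoodlePoint` hook are hypothetical, and I am assuming the scene’s AR Session Origin already has an `ARPlaneManager` and an `ARRaycastManager` assigned in the Inspector.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.XR.ARFoundation;
using UnityEngine.XR.ARSubsystems;

// Hypothetical component for illustration only.
public class DoodleTouchRaycaster : MonoBehaviour
{
    [SerializeField] ARPlaneManager planeManager;     // assumed to be assigned in the Inspector
    [SerializeField] ARRaycastManager raycastManager; // assumed to be assigned in the Inspector

    // Reused between frames to avoid allocating a new list on every touch.
    static readonly List<ARRaycastHit> hits = new List<ARRaycastHit>();

    void Update()
    {
        // Stay idle until at least one plane is being tracked.
        if (planeManager.trackables.count == 0) return;
        if (Input.touchCount == 0) return;

        Touch touch = Input.GetTouch(0);

        // Cast from the touch position and keep only hits inside a plane's polygon.
        if (raycastManager.Raycast(touch.position, hits, TrackableType.PlaneWithinPolygon))
        {
            // Hits are sorted closest-first, so the first entry is the nearest surface.
            OnDoodlePoint(hits[0].pose.position);
        }
    }

    // Hypothetical hook: the real drawing logic would consume this point.
    void OnDoodlePoint(Vector3 worldPosition)
    {
        Debug.Log($"Doodle point at {worldPosition}");
    }
}
```

Checking `trackables.count` every frame is the simplest approach; subscribing to the plane manager’s `planesChanged` event would be an alternative if polling ever becomes a concern.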

The Doodle logic allows the player to choose the color and thickness of the line they can draw with their finger. It also clears all lines with the “Clear” button on the right.
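For my own notes, this is roughly how I picture the color, thickness, and “Clear” behavior working with Unity’s `LineRenderer`. It is a sketch under assumptions, not the tutorial’s implementation: `DoodleLines`, its fields, and its method names are all made up, and the material is assumed to be one that respects the line’s vertex colors.

```csharp
using System.Collections.Generic;
using UnityEngine;

// Hypothetical component for illustration only.
public class DoodleLines : MonoBehaviour
{
    [SerializeField] Material lineMaterial; // assumed: a material that shows vertex colors
    public Color currentColor = Color.red;  // would be set by the color buttons
    public float currentWidth = 0.01f;      // would be set by the thickness slider (world units)

    readonly List<GameObject> lines = new List<GameObject>();
    LineRenderer activeLine;

    // Start a new stroke with the currently selected color and width.
    public void BeginLine(Vector3 start)
    {
        var lineObject = new GameObject("DoodleLine");
        lines.Add(lineObject);

        activeLine = lineObject.AddComponent<LineRenderer>();
        activeLine.material = lineMaterial;
        activeLine.useWorldSpace = true;
        activeLine.startColor = activeLine.endColor = currentColor;
        activeLine.startWidth = activeLine.endWidth = currentWidth;
        activeLine.positionCount = 1;
        activeLine.SetPosition(0, start);
    }

    // Append a point to the stroke in progress.
    public void AddPoint(Vector3 point)
    {
        if (activeLine == null) return;
        activeLine.positionCount++;
        activeLine.SetPosition(activeLine.positionCount - 1, point);
    }

    // What the "Clear" button would call: remove every stroke drawn so far.
    public void ClearAll()
    {
        foreach (var lineObject in lines) Destroy(lineObject);
        lines.Clear();
        activeLine = null;
    }
}
```

Giving each stroke its own GameObject keeps the color and width of earlier lines fixed when the player changes the settings, and makes clearing a matter of destroying the objects.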

The tutorial finishes up with some post-processing effects like the vignette effect visible in this screenshot.

While testing the app’s builds, I ran into my first problem. The UI at the bottom of the screen features a slider for the player to choose the thickness of the line they draw. Using this slider would often trigger my phone’s app-switching feature, so I decided to move the UI to the top of the screen.

The slider often switches the AR app to the previously used app.

So the UI is moved to the top of the screen for now.

Next, I would like to be able to draw on my face, so I want to implement a “Camera Flip” feature and face tracking.

Unity’s AR features are quite buggy for me in Unity 2020.2.2, so I have to keep uninstalling and reinstalling some XR packages just to get basic XR features to appear. This is especially troubling when I make a build and the XR features turn out to be missing: the built app launches, but no longer starts the rear-facing camera automatically as it used to.

On the subject of cameras, Unity’s AR Foundation uses an enumeration (CameraFacingDirection) to choose between no camera, the world camera, and the user camera. The world camera is the rear-facing camera, and the user camera is the front-facing camera. I added an AR Camera Manager and a simple script to try to control the device’s camera, but to no avail. I need to do more research to debug this feature.
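For reference, a camera flip script along these lines might look like the sketch below. It assumes AR Foundation 4.x, where `ARCameraManager` exposes `requestedFacingDirection` and `currentFacingDirection`; the class name `CameraFlipper` and the idea of wiring `Flip()` to a UI button are my own assumptions, not something from the tutorial.

```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;

// Hypothetical component for illustration only.
public class CameraFlipper : MonoBehaviour
{
    [SerializeField] ARCameraManager cameraManager; // assumed to be assigned in the Inspector

    // Intended to be called from a UI button.
    public void Flip()
    {
        // Ask for the opposite of whatever camera is currently active.
        cameraManager.requestedFacingDirection =
            cameraManager.currentFacingDirection == CameraFacingDirection.User
                ? CameraFacingDirection.World
                : CameraFacingDirection.User;

        Debug.Log($"Requested: {cameraManager.requestedFacingDirection}, " +
                  $"current: {cameraManager.currentFacingDirection}");
    }
}
```

Note that the property is a request rather than a command, so the current direction may not change right away, or at all. One thing I still need to verify is whether the other AR features enabled in the scene (plane detection, for example) limit which camera the device is allowed to use.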